GML newsletter. Issue #2: Laplacians, Scaling GNNs, KDD, MLSS, Books, and more!

Welcome to the 2nd issue of the GML newsletter!

The summer is over 🌄 and it’s time to get back to school 🏫 (or to self-study with this newsletter).

In today’s email you will gain some intuition about graph Laplacians and learn about new ways to scale your graph neural networks. You can also watch videos by top researchers from summer schools or read digests of recent conferences and workshops. And finally, you can register for cool upcoming events that will be valuable for graph researchers.

Let’s go!

Blog posts

A seemingly simple question, “What does a graph Laplacian represent❓”, was recently raised on MathOverflow, leading to a pool of good explanations and blog posts, including László Lovász’s link to random walks, Muni Pydi’s step-by-step procedure for deriving the graph Laplacian, and David Childers’s interpretation of the graph Laplacian from the perspectives of functional analysis, probability, statistics, differential equations, and topology.

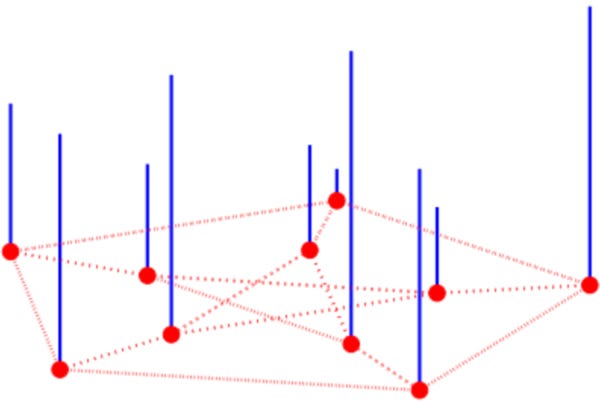

A graph function over the vertices of the Petersen graph.
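For some hands-on intuition, here is a minimal NumPy sketch (mine, not taken from any of the posts above) that builds the combinatorial Laplacian L = D - A of the Petersen graph and checks one common reading of it: for a function f on the vertices, f^T L f equals the sum of squared differences of f across edges, so L measures how "rough" f is over the graph. The helper names `petersen_edges` and `laplacian` are made up for this example.

```python
import numpy as np

def petersen_edges():
    """Edges of the Petersen graph: outer 5-cycle, inner pentagram, 5 spokes."""
    outer = [(i, (i + 1) % 5) for i in range(5)]
    inner = [(5 + i, 5 + (i + 2) % 5) for i in range(5)]
    spokes = [(i, i + 5) for i in range(5)]
    return outer + inner + spokes

def laplacian(n, edges):
    """Combinatorial Laplacian L = D - A of an undirected, unweighted graph."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    D = np.diag(A.sum(axis=1))  # diagonal matrix of vertex degrees
    return D - A

edges = petersen_edges()
L = laplacian(10, edges)

# A random function on the vertices (one value per vertex).
rng = np.random.default_rng(0)
f = rng.normal(size=10)

# Quadratic form vs. explicit sum of squared differences over edges.
quad = f @ L @ f
edge_sum = sum((f[i] - f[j]) ** 2 for i, j in edges)
print(np.isclose(quad, edge_sum))

# The spectrum of L: the constant function has eigenvalue 0.
eigenvalues = np.linalg.eigvalsh(L)
print(np.round(eigenvalues, 6))
```

A constant f gives f^T L f = 0, which is why the smallest eigenvalue of L is always 0 for a connected graph.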

Michael Bronstein continues a marathon 🏃‍♀️ of great blog posts on GML. In a new post, he describes their recent work on scaling GNNs to large networks, starting with an introduction to sampling-based methods (e.g. GraphSAGE, GraphSAINT, ClusterGCN), which smartly sample a subgraph for each training batch. He then explains that it can be beneficial to precompute the r-hop feature matrices, A^r X, once and train a plain MLP on these features. The algorithm is already available in PyTorch Geometric.

SIGN architecture. The key to its efficiency is the pre-computation of the diffused features (marked in red).
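To make the precomputation idea concrete, here is a rough NumPy sketch. It is a simplification and not the authors' implementation: I assume a symmetrically normalized adjacency with self-loops as the diffusion operator, simply concatenate X, AX, ..., A^r X, and use random weights for a two-layer MLP. The names `normalized_adjacency`, `precompute_hops`, and `mlp_forward` are hypothetical.

```python
import numpy as np

def normalized_adjacency(A):
    """D^{-1/2} (A + I) D^{-1/2}; an assumed choice of diffusion operator."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def precompute_hops(A, X, r):
    """Concatenate [X, SX, S^2 X, ..., S^r X] column-wise (done once, offline)."""
    S = normalized_adjacency(A)
    feats = [X]
    for _ in range(r):
        feats.append(S @ feats[-1])
    return np.concatenate(feats, axis=1)

def mlp_forward(Z, W1, W2):
    """Two-layer MLP with ReLU; it never touches the graph again."""
    return np.maximum(Z @ W1, 0.0) @ W2

# Toy path graph 0-1-2-3 with one-hot node features.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.eye(4)

Z = precompute_hops(A, X, r=2)  # shape: (4 nodes, 3 * 4 features)

# Random weights stand in for trained parameters.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(Z.shape[1], 8))
W2 = rng.normal(size=(8, 2))

# A mini-batch is just a slice of rows: no neighbor sampling is needed.
batch = np.array([0, 2])
logits = mlp_forward(Z[batch], W1, W2)
print(Z.shape, logits.shape)
```

The point of the design is that all graph operations happen before training, so batching reduces to picking rows of Z.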

PennyLane, a Python library for quantum machine learning developed at Xanadu, now has an example of quantum graph recurrent neural networks (QGRNN), which are the quantum analogue 👩‍🔬 of a classical graph recurrent neural network, and a subclass of the more general quantum graph neural network (QGNN). Both the QGNN and QGRNN were introduced in a 2019 paper by Google X.

A visual representation of one execution of the QGRNN for one piece of quantum data.

Last but not least, Bastian Rieck wrote a post on topological data analysis papers at ICML 2020, covering graph filtration techniques, topological autoencoders, and normalizing flows.

Videos

Aiming to bring the best ML/AI environments closer to Indonesia 🌏, Machine Learning Summer School - Indonesia gathered top researchers to talk about the latest advances in ML. Among others, Daniel Worrall spoke about equivariance and inductive biases (slides are here), diving into group theory and convolutions, while Max Welling touched on graphical models and graph neural networks, describing the difficulties of applying convolutions on manifolds and curved spaces. Check out the full schedule here.

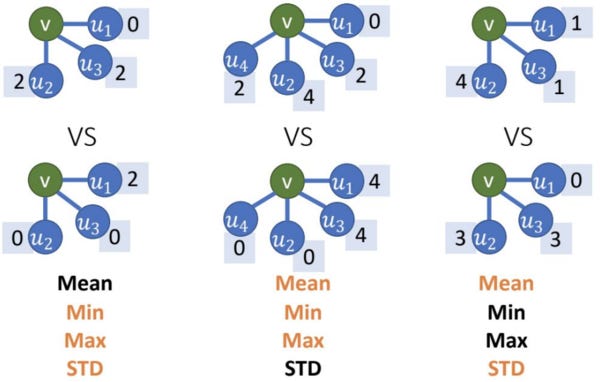

Petar Veličković also gave a presentation about their recent work, Principal Neighbourhood Aggregation for Graph Nets, which extends the theoretical framework (initiated by the GIN paper) to continuous features that appear frequently in the real world 🌐. One of the messages is that we may need to go beyond sum aggregators over neighborhoods and also use min/max and normalized-moment aggregators.

Aggregations that are injective (black) and non-injective (orange) for top-bottom graph pairs.
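A tiny NumPy illustration of that message (my own toy example, not code from the paper, and without the degree scalers the paper also uses): each aggregator alone confuses some pairs of neighborhoods, but different aggregators fail on different pairs. The helpers `aggregate` and `differing` are hypothetical names.

```python
import numpy as np

# Candidate aggregators over a multiset of scalar neighbor features.
AGGREGATORS = {
    "sum": np.sum,
    "mean": np.mean,
    "min": np.min,
    "max": np.max,
    "std": np.std,  # standard deviation, a normalized second moment
}

def aggregate(neighbors):
    """Apply every aggregator to one neighborhood."""
    x = np.asarray(neighbors, dtype=float)
    return {name: float(fn(x)) for name, fn in AGGREGATORS.items()}

def differing(n1, n2):
    """Names of the aggregators that tell the two neighborhoods apart."""
    a, b = aggregate(n1), aggregate(n2)
    return [name for name in AGGREGATORS if not np.isclose(a[name], b[name])]

# Same sum and mean, but different spread.
print(differing([1.0, 3.0], [2.0, 2.0]))  # ['min', 'max', 'std']

# Same values, different neighborhood size: only sum notices.
print(differing([2.0], [2.0, 2.0]))       # ['sum']
```

Concatenating several aggregators, as in the second half of the example, is the simplest way to cover each other's blind spots.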

Events

August was rich 💰 in events relevant to our community. In addition to MLSS-Indo described above, there were the KDD conference, many graph-related workshops, and JuliaCon. Let’s have a sneak peek at those.

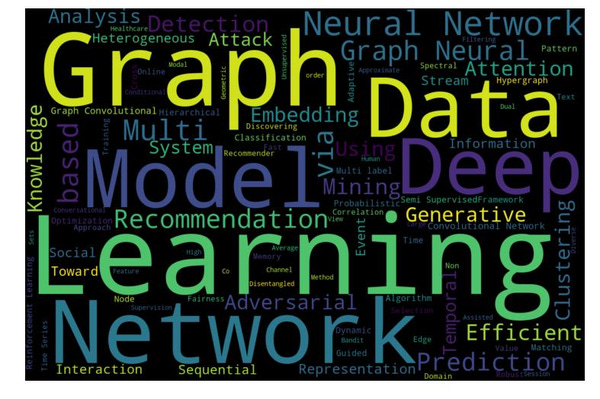

Papers related to graphs made up around 30% of all accepted papers at KDD 2020. Among those, the most frequent theme is the development of new graph neural network models for various practical applications (e.g. molecule prediction 🧪 or recommender systems). Another topic that occurred several times is how to tackle the computational complexity of GNN models (e.g. via PageRank, minimal variance sampling, big, small, and redundancy-free models). Other papers address graph mining topics such as clustering, drawing, and summarization. For more highlights of KDD 2020, you can check my recent post.

Graphs and recommendations are traditionally two big topics at KDD.

Of particular importance to the GML community are the workshops, which are usually the best place to meet like-minded colleagues 🎓 and discuss the latest problems they are working on. The MLG workshop had a great line-up of speakers talking about applications of GNNs to algorithmic reasoning, cybersecurity, healthcare, and other important topics (videos can be found here). The Mining Knowledge Graph workshop and the Knowledge Graphs and E-commerce workshop, in turn, had a number of keynotes on applications of KGs to the medical, biological, and consumer domains.

The Israeli Geometric Deep Learning workshop gathered many great researchers who spoke about recent work on deep learning on non-Euclidean domains such as sets, graphs, point clouds, and surfaces, and its applications to computer vision 👀, graphics, 3D modeling, etc. The video of the workshop is available online.

While Python 🐍 is the default language for analyzing graphs, numerous other languages provide packages for dealing with graphs. At the recent JuliaCon, devoted to the Julia programming language, many talks were about new graph packages with applications to transportation networks, dynamical systems, geometric deep learning, knowledge graphs, and others.

Books

Within one day of each other, 2 (!) books 📚 were announced.

Graph Representation Learning book by Will Hamilton, which so far has 3 main chapters on node embeddings, GNNs, and generative models.

Deep Learning on Graphs book by Yao Ma and Jiliang Tang. This should be available this month and should focus on the foundations of GNNs as well as applications.

Upcoming events

Make sure to register for those in advance.

The 28th International Symposium on Graph Drawing and Network Visualization will be held online from Sep. 16 to 18, free of charge. This venue will have many insightful talks about new techniques for visualizing graphs 🎨.

Data Fest by Open Data Science is a recurring data science conference organized by the Russian-speaking community. This year (19-20 Sep.) the conference will be global, free of charge, and fully online. Unlike academic conferences, Data Fest has a mix of researchers, practitioners, and industry leaders 👨‍🏫. I am organizing a section on Graph ML, and we have 10 great speakers who will talk about embeddings, knowledge graphs, nearest neighbor search, and many other exciting areas. So feel free to register and tune in.

That’s all for today. Thanks for reading!

Feedback 💬 As always, if you have something to say about this issue, feel free to reply to this email. Likewise, contribute 💪 to future issues of the newsletter by sending me relevant content. Subscribe to my Telegram channel about GML, Medium, and Twitter. And spread the word among your friends by emailing this letter or by tweeting about it 🐦