GML Newsletter - Issue #3: Large Hadron Collider, Hyperfood, and Manifold Learning.

Welcome to the 3rd issue of the GML newsletter!

I hope your papers got accepted to NeurIPS and submitted to ICLR, so that you can grab a cup of coffee ☕ and enjoy the latest updates in the field of GML.

In today’s email, you will see the next big bets for GNNs and learn how you can create a graph with neural networks. You will also find ongoing courses that cover GNNs, a couple of videos about the many applications of graphs, and upcoming graph ML events to prepare for 🌟.

Let’s go!

Blog posts

Going Big in Particle Discovery

The CMS detector at the Large Hadron Collider takes billions of images of high-energy collisions every second to search for evidence of new particles. Graph neural networks expeditiously decide which of these data to keep for further analysis. Photo: CERN

We have already seen GNNs being applied to complex physics, autonomous driving, and drug design, but in a new blog post the US-based physics lab Fermilab discusses what is probably the biggest application of GNNs: discovering new particles in the data from the Large Hadron Collider (LHC) 💥. The goal is to analyze relationships between pixels in a large number of images in order to decide whether to keep an image for later processing. They hope to have GNNs functional by the time the third run of the LHC starts in 2021.

Manifold Learning 2.0

The previous approach of first embedding a graph and then applying an ML model is now replaced with end-to-end GNN training.

One of the hot topics this year is the construction of a graph from unstructured data (e.g. 3D points or images 📷). In a new post, Michael Bronstein asks how we can do this meaningfully and suggests that using GNNs both to learn the structure of the graph and to solve the downstream task (e.g. in DGM) can be a better alternative to a decoupled approach (e.g. DGCNN).
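To make "creating a graph from unstructured data" concrete, here is a minimal sketch of the simplest hand-crafted construction: connecting every point of a 3D point cloud to its k nearest neighbours. It is a fixed, non-learned baseline, not the method from the post. The function name `knn_graph` and the random point cloud are illustrative assumptions.

```python
import numpy as np

def knn_graph(points, k):
    """Return a directed edge list (source, target) linking each point to its k nearest neighbours."""
    # Pairwise squared Euclidean distances, shape (n, n).
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # Exclude self-loops by making each point infinitely far from itself.
    np.fill_diagonal(dist2, np.inf)
    # Indices of the k closest points for every row.
    neighbours = np.argsort(dist2, axis=1)[:, :k]
    sources = np.repeat(np.arange(len(points)), k)
    return np.stack([sources, neighbours.ravel()], axis=1)

rng = np.random.default_rng(0)
cloud = rng.normal(size=(100, 3))  # hypothetical 3D point cloud
edges = knn_graph(cloud, k=5)
print(edges.shape)  # (500, 2)
```

In this sketch the neighbourhoods are chosen once from the raw coordinates and never updated, whereas the approach discussed in the post lets the model learn the graph together with the downstream task.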

GNNs are on the Maps

Researchers at DeepMind have partnered with the Google Maps team to improve the accuracy of real time ETAs by using GNNs.

DeepMind for Google released a blog post on how GNNs can be applied to predict traffic for Google Maps 🗺. While the post gives few exact details about the models they used, it does mention a few interesting ones, such as (i) using sampling strategies for training, (ii) using RL to select which subgraphs should go into each batch, and (iii) using a parametric learning rate for training.

What’s on the menu today?

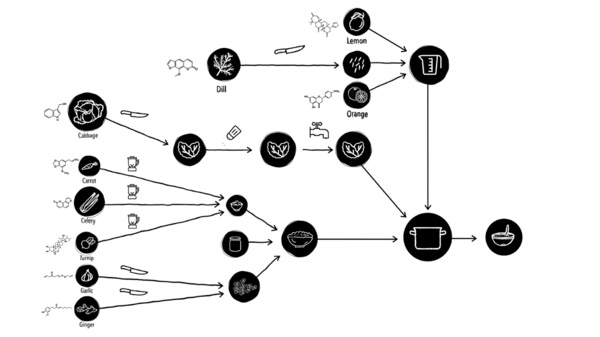

Food preparation can be modeled as a computational graph with cooking transformations as edges, and optimized by choosing the operations that best preserve the anti-cancer molecular composition.

Michael Bronstein, Kirill Veselkov, and Gabriella Sbordone present the idea of hyperfood 🥗, food that contains thousands of bioactive molecules, some of which are similar to anti-cancer drugs. In their paper in Nature Scientific Reports, they apply a learnable diffusion process to hunt for anti-cancer molecules in food using protein-protein and drug-protein interaction graphs. Soon they hope to apply graph ML to generate recipes that strike the optimal balance between health, taste, and maybe even aesthetics.

Videos

Graph ML at Data Fest 2020

Videos of the Graph ML track at Data Fest 2020, a data science conference, are now openly available 🔑. This year, speakers from industry and academia talked about kNN search on graphs, graphical models, unsupervised embeddings, knowledge graphs, graph visualization, and many other exciting directions in graph research.

3DGV Seminar

3DGV Seminar is a weekly lecture series on 3D Geometry 💠 and Vision. One of the seminars was presented by Michael Bronstein, who talked about inductive biases on graphs, the history of GNN architectures, and several successful applications.

Can you trust your GNN?

Stephan Günnemann gave a keynote at ECML-PKDD on the robustness of SOTA graph-based learning techniques, highlighting the unique challenges and opportunities 💸 that come with the graph setting.

Courses

GNN course at UPenn

In addition to CS224W at Stanford and COMP 766 at McGill (both should happen next semester), there is an ongoing course on Graph Neural Networks at the University of Pennsylvania 🦔 by Alejandro Ribeiro, who works on graph ML and graph signal processing. So far, the course has covered permutation equivariance, graph convolutional filters, empirical risk minimization, and graph neural networks.
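Two of these topics, permutation equivariance and graph convolutional filters, fit in a few lines of NumPy. The sketch below is not taken from the course materials. It assumes a polynomial filter of the form sum_k h[k] S^k x, with a random symmetric adjacency matrix `S`, signal `x`, and coefficients `h` made up for illustration, and checks that relabelling the nodes before filtering gives the same result as relabelling afterwards.

```python
import numpy as np

def graph_filter(S, x, h):
    """Apply the polynomial graph filter sum_k h[k] * S^k x."""
    out = np.zeros_like(x)
    Skx = x.copy()          # S^0 x
    for hk in h:
        out += hk * Skx
        Skx = S @ Skx       # next power of S applied to x
    return out

rng = np.random.default_rng(0)
n = 6
A = rng.integers(0, 2, size=(n, n))
S = np.triu(A, 1)
S = (S + S.T).astype(float)   # symmetric adjacency matrix, no self-loops
x = rng.normal(size=n)        # hypothetical graph signal
h = [0.5, 0.3, 0.2]           # hypothetical filter coefficients

perm = rng.permutation(n)
P = np.eye(n)[perm]           # permutation matrix: (P @ x)[i] == x[perm[i]]

lhs = graph_filter(P @ S @ P.T, P @ x, h)  # filter the relabelled graph and signal
rhs = P @ graph_filter(S, x, h)            # relabel the filtered output
print(np.allclose(lhs, rhs))  # True
```

The check holds because (P S Pᵀ)^k P x = P S^k x for any permutation matrix P, so the filter output does not depend on how the nodes are numbered.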

NYC Deep Learning course

The Deep Learning course at NYU 🗽, taught by Yann LeCun and Alfredo Canziani, has two final lectures on graph convolutional networks (by Xavier Bresson) and deep learning for structured prediction. They cover spectral convolutions, energy-based factor graphs, and graph transformer networks. In addition to lectures, there are also practical sessions and exercises.

Events

CIKM 2020

The Conference on Information and Knowledge Management (CIKM) will take place online on 19-23 October, with a number of research and applied papers, tutorials, and workshops about graph neural networks, knowledge graphs, and general algorithms 🔢.

That’s all for today. Thanks for reading!

Feedback 💬 As always, if you have something to say about this issue, feel free to reply to this email. Likewise, contribute 💪 to future issues by sending me relevant content. Subscribe to my Telegram channel about GML, Medium, and Twitter. And spread the word among your friends by forwarding this email or tweeting about it 🐦